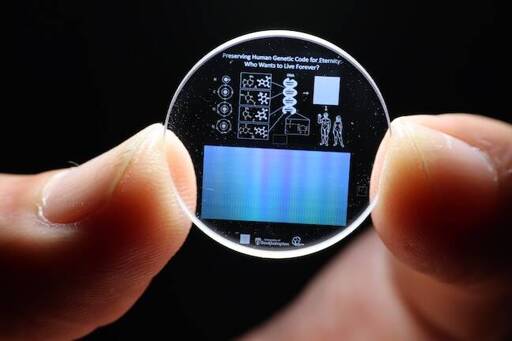

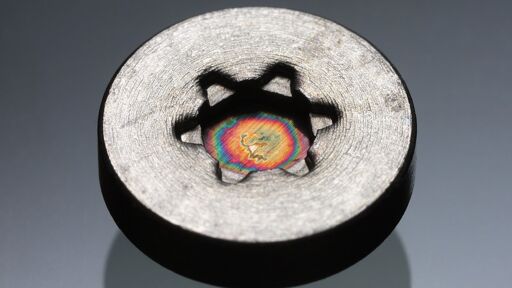

What if some civilization in the past already had something like this, and there are ‘plates’ or pieces of rock out there (under sand dunes? written into the sides of those vases from ancient Egypt?) that still hold their data?

Could someone build portable readers that can at least spot old pottery fragments that might be FULL of videos?

LOL, I would NOT be surprised!!